Why Apache Kafka is the Backbone of Modern Data Streaming

Table of Contents

- What is Apache Kafka?

- Key Features of Apache Kafka

- Core Advantages of Using Apache Kafka

- Apache Kafka Architecture and Flow Process

- Real-World Use Cases

- Apache Kafak On Netflix

- Conclusion

What is Apache Kafka?

Apache Kafka is an open-source distributed event streaming platform used to build real-time data pipelines and streaming applications. Originally developed at LinkedIn, Kafka is now maintained by the Apache Software Foundation and is a critical component in modern data architectures.

It enables high-throughput, low-latency transmission of data between systems, supporting pub-sub (publish/subscribe) and stream-processing models.

Key Features of Apache Kafka

- High Throughput: Handles millions of messages per second.

- Fault Tolerance: Distributed and replicated architecture ensures resilience.

- Scalability: Horizontal scaling for both storage and processing.

- Durability: Stores data on disk with configurable retention.

- Real-time Stream Processing: Compatible with stream processing frameworks like Kafka Streams and Apache Flink.

- Multiple Consumers: Allows parallel consumption without data loss.

Core Advantages of Using Apache Kafka

1. 🚀 High Performance

Kafka is optimized for real-time processing and can ingest and distribute massive volumes of data with minimal latency.

2. 🔄 Decoupling of Systems

Kafka allows loose coupling between data producers and consumers. Systems can publish or subscribe to topics without depending on each other.

3. 💾 Persistent Storage

Unlike many traditional messaging queues, Kafka can store data persistently, allowing consumers to replay events for auditing or reprocessing.

4. ⚙️ Scalable Architecture

Kafka can be scaled horizontally by adding more brokers, partitions, or consumers.

5. 🔒 Reliability and Fault Tolerance

Data is replicated across multiple brokers. Even if a broker fails, data remains available.

6. 📈 Strong Ecosystem

Kafka supports integration with tools like:

- Apache Spark

- Apache Flink

- Elasticsearch

- Hadoop

- ksqlDB

Kafka Architecture and Flow Process

🧭 Kafka Flow Process:

[Producer] → [Kafka Broker] → [Topic Partition] → [Consumer Group]Components Explained:

- Producer: Sends data (events) to a Kafka topic.

- Broker: A Kafka server that stores data and serves client requests.

- Topic: Logical channel to which data is sent; partitioned for parallelism.

- Consumer: Reads data from topics; can be grouped into consumer groups.

- Zookeeper (or KRaft in newer versions): Manages Kafka cluster metadata.

🔁 Flow Diagram Description:

- Producers push messages to specific topics.

- Kafka divides each topic into partitions for load balancing and parallelism.

- Brokers store messages and manage partition assignments.

- Consumers subscribe to one or more topics and consume messages at their own pace.

- Consumers in the same group divide the partitions among themselves for parallel consumption.

Real-World Use Cases

1. Log Aggregation (e.g., Uber, Netflix)

Kafka collects logs from multiple services and delivers them to a central analytics platform like Elasticsearch or Splunk.

2. Real-Time Fraud Detection

Banks and fintech companies use Kafka to stream transaction data to ML models for real-time fraud scoring.

3. IoT Device Monitoring

Kafka ingests data from thousands of devices and sensors for real-time monitoring, alerting, and analysis.

4. Website Activity Tracking

Kafka is widely used to track user clicks, page views, and interactions in real time (e.g., LinkedIn, Airbnb).

5. Microservices Communication

Kafka serves as an event bus between microservices, ensuring asynchronous and decoupled communication.

Apache Kafka vs Traditional Messaging Systems

| Feature | Apache Kafka | RabbitMQ / ActiveMQ |

| Message Retention | Long-term storage | Deletes after consumption |

| Throughput | Very high | Moderate |

| Scalability | Horizontal scaling | Limited by queues |

| Architecture | Distributed | Centralized |

| Replay Capability | ✅ Supported | ❌ Not supported |

| Use Case | Streaming, Big Data | Task Queues, RPC |

✅ Use Cases of Apache Kafka at Netflix

Netflix processes hundreds of billions of events daily, from user actions to system logs. Kafka provides the backbone for this data pipeline, enabling scalable, real-time event streaming across their microservices architecture.

1. Real-Time Operational Monitoring

- Netflix uses Kafka to collect telemetry data such as:

- Service health metrics

- Application logs

- Latency and error rates

- Data is sent via Kafka to monitoring tools (e.g., Atlas, LIP, Insight) for real-time dashboards and alerts.

2. Personalization & Recommendations

- Kafka helps stream user behavior data (watch history, browsing, clicks) into ML pipelines.

- This real-time data trains and updates personalization models, influencing:

- What thumbnails are shown

- What movies are recommended

- What trailers autoplay

3. Edge Event Ingestion (from Devices)

- Devices like smart TVs, mobile apps, and browsers send client-side events (play, pause, buffer, search).

- Kafka ingests these events into a unified data pipeline to power analytics and decision-making.

4. A/B Testing and Experimentation

- Kafka transports experiment interaction events to Netflix’s A/B testing engine, allowing engineers to:

- Compare user experiences

- Validate new features

- Make data-driven UX decisions

5. Microservice Communication

- Kafka acts as an event bus between many of Netflix’s internal microservices.

- Enables asynchronous, event-driven architectures, reducing service coupling and increasing resilience.

6. Real-Time Video Quality Monitoring

- Kafka streams real-time QoS (Quality of Service) metrics like bitrate, buffering events, and error logs.

- These metrics are used to:

- Automatically adjust video quality (adaptive streaming)

- Troubleshoot issues in specific regions or devices

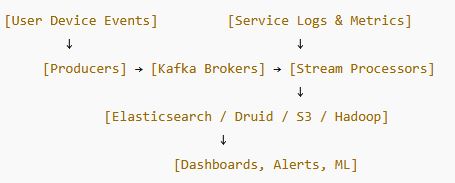

⚙️ High-Level Kafka Architecture at Netflix

- Producers: Netflix services and edge clients send events to Kafka.

- Kafka Brokers: Cluster of brokers handle load-balanced ingestion and storage.

- Consumers: Include Spark Streaming, Flink, internal tools, and ML pipelines.

- Sinks: Final data lands in S3 (for batch jobs), Elasticsearch (for fast querying), or databases for long-term storage.

📈 Scale at Netflix

- Processes > 1 trillion messages/day

- Kafka clusters handle gigabytes/second throughput

- Uses multi-region Kafka clusters for redundancy and latency optimization

🔐 Security and Compliance

Netflix customizes Kafka with:

- TLS encryption in-transit

- Service-to-service authentication

- Access control policies (ACLs) per topic

🔄 Integration with Other Netflix Tools

| Tool | Purpose | Integration with Kafka |

|---|---|---|

| Mantis | Real-time stream processing | Consumes Kafka topics for dashboards & ML |

| Atlas | Telemetry & monitoring | Kafka → Atlas pipeline for metrics |

| Flamescope | Performance analysis | Kafka events feed flame graphs |

| LIP | Log ingestion | Kafka transports structured logs |

Conclusion

Apache Kafka is not just a messaging system—it’s a streaming data platform that transforms how modern systems interact in real time. Its scalability, fault-tolerance, and performance make it ideal for high-demand environments like big data analytics, real-time monitoring, and event-driven microservices.

If your organization deals with large volumes of fast-moving data and requires real-time insights, Kafka is the tool that can unify your data pipelines and simplify architecture.

❓ Frequently Asked Questions (FAQ) about Apache Kafka and Modern Data Streaming

1. What is Apache Kafka?

Apache Kafka is an open-source distributed event streaming platform used to build real-time data pipelines and streaming applications. It enables high-throughput, low-latency transmission of data between systems using models like publish/subscribe and stream processing.

2. Why is Kafka considered the backbone of modern data streaming?

Kafka supports massive data throughput, fault tolerance, scalability, and real-time stream processing — making it ideal for handling continuous streams of data in modern distributed systems.

3. What are the key features that make Kafka strong for data streaming?

Kafka’s main strengths include high throughput, horizontal scalability, persistent storage of events, fault tolerance through replication, and support for multiple consumers processing in parallel.

4. How does Kafka differ from traditional messaging systems?

Unlike traditional message queues that delete messages after consumption, Kafka retains data for a configurable period, supports horizontal scaling, and can replay events — features critical for real-time analytics and big data use cases.

5. What are typical use cases of Kafka?

Kafka is widely used for log aggregation, real-time fraud detection, IoT device data ingestion, website activity tracking, and as an event bus for microservices communication.

6. How does Kafka support real-time processing?

Kafka works with stream processing frameworks like Kafka Streams or Apache Flink, allowing applications to transform, analyze, and act on data as it flows through the system.

7. Can Kafka integrate with other tools and systems?

Yes — Kafka has a rich ecosystem and integrates with platforms such as Apache Spark, Elasticsearch, Hadoop, ksqlDB, and more, making it a central hub for data movement and processing.

8. How does Kafka ensure reliability and fault tolerance?

Kafka replicates data across multiple brokers in a cluster. If one broker fails, replicas uphold availability and prevent data loss, which is essential for critical real-time systems.

9. What role does Kafka play in microservices architectures?

In event-driven microservices, Kafka decouples producers and consumers, acting as a central event bus that enables asynchronous communication and reduces tight dependencies between services.

10. Is Kafka suitable for large enterprises only?

While Kafka excels with high-volume data environments, its flexible design allows adoption in a range of organizations — though architectural complexity should be considered relative to specific use cases.